Moving to drlongnecker.com!

I’m finally taking the time to replace the domain redirect and house my blog, prototype site, and other fun toys over at http://drlongnecker.com.

This WordPress blog will remain for link joy and the information it contains (rather than export/import madness).

Stop by, say hi, and look forward to new posts coming soon!

-david

New Adventures Await with @Tracky!

After over a decade with the Wichita Public Schools, I’m starting a new adventure with fantastic folks at Tracky. I’m looking forward to the journey and opportunity to work with and learn from a fantastic team.

Onward!

Mashing CSVs around using PowerShell

Since I spend most of my day in the console, PowerShell also serves as my ‘Excel’. So, continuing my recent trend of PowerShell-related posts, let’s dig into a quick and easy way to parse CSV files (or most any type of file) by creating objects!

We, of course, need a few rows of example data. Let’s use this pseudo student roster.

Example data:

Student,Code,Product,IUID,TSSOC,Date
123456,e11234,Reading,jsmith,0:18,1/4/2012
123456,e11234,Reading,jsmith,1:04,1/4/2012
123456,e11234,Reading,jsmith,0:27,1/5/2012
123456,e11234,Reading,jsmith,0:19,1/7/2012
123456,e11235,Math,jsmith,0:14,1/7/2012

Now, for reporting, I want my ‘Minutes’ to be a calculation of the TSSOC column (hours:minutes). Easy, we have PowerShell–it can split AND multiply!

The code:

Begin by creating an empty array to hold our output, importing our data into the ‘pipe’, and opening up an iteration (for each) function. The final $out is our return value–calling our array so we can see our results.

$out = @()

import-csv data_example.csv |

% {

}

$out

Next, let’s add in our logic to split out the hours and minutes. We have full access to the .NET string methods in PowerShell, which includes .Split(). .Split() returns an array, so since we have HH:MM, our first number is our hours and our second number is our minutes. Hours then need to be multiplied by 60 to return the “minutes per hour.”

You’ll also notice the [int] casting–this ensures we can properly multiply–give it a whirl without and you’ll get 60 0’s or 1’s back (it multiplies the string).

$out = @()

import-csv data_example.csv |

% {

$hours = [int]$_.TSSOC.Split(':')[0] * 60

$minutes = [int]$_.TSSOC.Split(':')[1]

}

$out
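To see why the [int] cast matters, here is a tiny sketch you can paste into the console. The '1:04' value comes from the sample roster; the variable names are only for illustration.

```powershell
# A single TSSOC value from the sample roster (hours:minutes).
$tssoc = '1:04'
$parts = $tssoc.Split(':')        # two strings: '1' and '04'

# Without a cast the left operand is a string, so * repeats it.
$repeated = $parts[0] * 3         # '111'

# With the cast, * is ordinary integer multiplication.
$hours   = [int]$parts[0] * 60    # 60
$minutes = [int]$parts[1]         # 4

$total = $hours + $minutes        # 64
$total
```

The cast binds to the value right next to it, so [int]$parts[0] * 60 converts first and multiplies second.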

The next step is to create a new object to contain our return values. We can use the new PowerShell v2.0 syntax to create a quick hashtable of our properties and values. Once we have our item, add it to our $out array.

$out = @()

import-csv data_example.csv |

% {

$hours = [int]$_.TSSOC.Split(':')[0] * 60

$minutes = [int]$_.TSSOC.Split(':')[1]

$item = new-object PSObject -Property @{

Date=$_.Date;

Minutes=($hours + $minutes);

UserId=$_.IUID;

StudentId=$_.Student;

Code=$_.Code;

Course=$_.Product

}

$out = $out + $item

}

With that, we’re done. We can pipe $out to sort for a bit of ordering, group it, format it as a table, or even export it BACK out as another CSV.

$out = @()

import-csv data_example.csv |

% {

$hours = [int]$_.TSSOC.Split(':')[0] * 60

$minutes = [int]$_.TSSOC.Split(':')[1]

$item = new-object PSObject -Property @{

Date=$_.Date;

Minutes=($hours + $minutes);

UserId=$_.IUID;

StudentId=$_.Student;

Code=$_.Code;

Course=$_.Product

}

$out = $out + $item

}

$out | sort Date, Code | ft -a

Quick and dirty CSV manipulation–all without opening anything but the command prompt!
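Grouping and exporting follow the same pattern. Here is a rough sketch that totals the minutes per course, assuming an $out array shaped like the one built above. The stand-in data uses [pscustomobject], which needs PowerShell v3 or later, and the TotalMinutes property name is made up for this example.

```powershell
# Stand-in for the $out array built above (minutes taken from the sample roster).
$out = @(
    [pscustomobject]@{ Course = 'Reading'; Minutes = 18 }
    [pscustomobject]@{ Course = 'Reading'; Minutes = 64 }
    [pscustomobject]@{ Course = 'Reading'; Minutes = 27 }
    [pscustomobject]@{ Course = 'Reading'; Minutes = 19 }
    [pscustomobject]@{ Course = 'Math';    Minutes = 14 }
)

# Group by course and total the minutes inside each group.
$totals = $out | Group-Object Course | ForEach-Object {
    [pscustomobject]@{
        Course       = $_.Name
        TotalMinutes = ($_.Group | Measure-Object Minutes -Sum).Sum
    }
}

# Sort, then turn the result back into CSV text (Export-Csv would write a file instead).
$totals | Sort-Object Course | ConvertTo-Csv -NoTypeInformation
```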

UPDATE: Matt has an excellent point in the comments below. PowerShell isn’t the ‘golden hammer’ for every task; it’s about finding the right tool for the job. We’re a mixed environment (Windows, Solaris, RHEL, Ubuntu), so PowerShell only applies to our Windows boxes. However, as a .NET developer, I spend 80-90% of my time on those Windows boxes. So let’s say it’s a silver hammer. 🙂

Now, the code in this post looks pretty long–and hopping back and forth between notepad, the CLI, and your CSV is tiresome. I bounce back and forth between the CLI and notepad2 with the ‘ed’ and ‘ex’ functions (these commands are ‘borrowed’ from Oracle PL/SQL). More information here.

So how would I type this if my boss ran into my cube with a CSV and needed a count of Minutes?

$out=@();Import-Csv data_example.csv | % { $out += (new-object psobject -prop @{ Date=$_.Date;Minutes=[int]$_.TSSOC.Split(':')[1]+([int]$_.TSSOC.Split(':')[0]*60);UserId=$_.IUID;StudentId=$_.Student;Code=$_.Code;Course=$_.Product }) }; $out | ft -a

Now, that’s quicker to type, but a LOT harder to explain. 😉 I’m sure this can be simplified down–any suggestions? If you could do automatic/implied property names, that’d REALLY cut it down.
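One possible answer to the property-name wish is Select-Object with calculated properties: columns you keep as-is are listed by name, and only renamed or computed columns need a hashtable. This is a sketch, not a benchmark-winning rewrite; the inline CSV text stands in for Import-Csv data_example.csv.

```powershell
# A few rows from the sample roster; in practice this would come from Import-Csv.
$csv = @'
Student,Code,Product,IUID,TSSOC,Date
123456,e11234,Reading,jsmith,0:18,1/4/2012
123456,e11234,Reading,jsmith,1:04,1/4/2012
123456,e11235,Math,jsmith,0:14,1/7/2012
'@

# Date and Code pass straight through; the rest are renamed or computed.
$rows = ($csv -split '\r?\n') | ConvertFrom-Csv | Select-Object Date, Code,
    @{ Name = 'Minutes';   Expression = { $h, $m = $_.TSSOC.Split(':'); [int]$h * 60 + [int]$m } },
    @{ Name = 'UserId';    Expression = { $_.IUID } },
    @{ Name = 'StudentId'; Expression = { $_.Student } },
    @{ Name = 'Course';    Expression = { $_.Product } }

$rows | Format-Table -AutoSize
```

There is no $out array to manage here because the objects flow straight down the pipeline.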

The Post-Certification Era?

Oh look, starting off with a disclaimer. This should be good!

These are patterns I’ve noticed in our organization over the past ten years–ranging from hardware to software to development technical staff. These are my observations, experiences with recruiting, and a good dash of my opinions. I’m certain there are exceptions. If you’re an exception, you get a cookie. 🙂

This isn’t specifically focused on Microsoft’s certifications. We’re a .NET shop, but we’re also an Oracle shop, a Solaris shop, and a RHEL shop. So many certification opportunities, so little training dollars.

Finally, I’ll also throw out that I have a few certifications. When I made my living as a full-time consultant and contractor and was just getting started, they were the right thing to do (read on for why). Years later … things have changed.

Evaluating The Post-Certification Era

In today’s development ecosystem, certifications seem to play a nearly unmentionable role outside of college recruitment offices and general practice consulting agencies. While certifications provide a baseline for those just entering the field, I rarely see established developers (read: >~2 years experience) heading out to the courseware to seek a new certification.

Primary reasons for certifications: entry into the field and “saleability”.

Entry into the field – provides a similar baseline to compare candidates for entry-level positions.

Example: An entry-level developer vs. hiring an experienced enterprise architect. For an entry-level developer, a certification usually provides a baseline of skills.

For an experienced architect, however, past project experience, core understanding of architecture practices, examples of work in open source communities, and scenario-based knowledge provides the best gauge of skills.

“Saleability” of certifications allows consulting agencies to “one up” other organizations, but certified staff often lack the real-world skills necessary for implementation.

Example: We had a couple of fiascos years back with a very reputable consulting company filled with certified developers who simply couldn’t wrap those skills into a finished product. We managed to bring the project back in-house and get our customers squared away, but it broke the working relationship we had with that consulting company.

Certifications provide a baseline for experience and expertise similar to college degrees.

Like in college, being able to cram and pass a certification test is a poor indicator of (or replacement for) handling real-life situations.

Example: Many certification “crammers” and boot camps are available for a fee–rapid memorization and passing of tests. I do not believe that these prepare you for actual situations AND do not prepare you to continue to expand your knowledge base.

Certifications are outdated before they’re even released.

Test-makers and publishers cannot keep up with technology at its current pace. The current core Microsoft certifications focus on v2.0 technologies (though they are slowly being updated to 4.0).

I’m sure it’s a game of tag between the DevDiv and Training teams up in Redmond. We, as developers, push for new features faster, but the courseware can only be written/edited/reviewed/approved so quickly.

In addition, almost all of our current, production applications are .NET applications; however, a great deal of functionality is derived from open-source and community-driven projects that go beyond the scope of a Microsoft certification.

Certifications do not account for today’s open-source/community environment.

A single “Microsoft” certification does not cover a large majority of the programming practices and tools used in modern development.

Looking beyond Microsoft allows us the flexibility to find the right tool/technology for the task. In nearly every case, these alternatives provide a cost savings to the district.

Example: Many sites that we develop now feature non-Microsoft ‘tools’ from the ground up.

- web engine: FubuMVC, OpenRasta, ASP.NET MVC

- view engine: Spark, HAML

- dependency injection/management: StructureMap, Ninject, Cassette

- source control: git, hg

- data storage: NHibernate, RavenDB, MySQL

- testing: TeamCity, MSpec, Moq, Jasmine

- tooling: PowerShell, rake

This doesn’t even take into consideration the extensive use of client-side programming technologies, such as JavaScript.

A more personal example: I’ve used NHibernate/FluentNHibernate for years now. Fluent mappings, auto mappings, insane conventions and more fill my day-to-day data modeling. NH meets our needs in spades and, since many of our objects talk to vendor views and Oracle objects, Entity Framework doesn’t meet our needs. If I wanted our team to dig into the Microsoft certification path, we’d have to dig into Entity Framework. Why would I want to waste everyone’s time?

This same question applies to many of the plug-and-go features of .NET, especially since most certification examples focus on arcane things that most folks would look up in a time of crisis anyway and not on the meat and potatoes of daily tasks.

Certifications do not account for the current scope of modern development languages.

Being able to determine an integer from a string and when to call a certain method crosses language and vendor boundaries. A typical Student Achievement project contains anywhere from three to six different languages–only one of those being a Microsoft-based language.

Whether it’s Microsoft’s C#, Sun’s Java, JavaScript, Ruby, or any number of scripting languages implemented in our department–there are ubiquitous core skills to cultivate.

Cultivating the Post-Certification Developer

In a “Google age”, knowing how and why components optimally fit together provides far more value than syntax and memorization. If someone needs a code syntax explanation, a quick search reveals the answer. For something more destructive, such as modifications to our Solaris servers, I’d PREFER our techs look up the syntax–especially if it’s something they do once a decade. There are no heroes when a backwards bash flag formats an array. 😉

Within small development shops, such as ours, a large percentage of development value-added skills lie in enterprise architecture, domain expertise, and understanding design patterns–typical skills not covered on technology certification exams.

Rather than focusing on outdated technologies and unused skills, a modern developer and development organization can best be ‘grown’ through active community involvement. Active community involvement provides a post-certification developer with several learning tools:

Participating in open-source projects allows the developer to observe, comment, and learn from other professional developers using modern tools and technologies.

Example: Submitting a code example to an open source project where a dozen developers pick it apart and, if necessary, provide feedback on better coding techniques.

Developing a social network of professional developers provides an instant feedback loop for ideas, new technologies, and best practices. Blogging, and reading blogs, allows a developer to cultivate their programming skill set with a world-wide echo chamber.

Example: A simple message on Twitter about an error in a technology released that day can garner instant feedback from a project manager at that company, prompting email exchanges, telephone calls, and the necessary steps to resolve the problem directly from the developer who implemented the feature in the new technology.

Participating in community-driven events such as webinars/webcasts, user groups, and open space discussions. These groups bolster existing social networks and provide knowledge transfer of best practices and patterns on current subjects as well as provide networking opportunities with peers in the field.

Example: Community-driven events provide both a medium to learn and a medium to give back to the community through talks and online sessions. This helps build both a mentoring mentality in developers as well as a drive to fully understand the inner-workings of each technology.

Summary

While certifications can provide a bit of value–especially getting your foot in the door, I don’t see many on the resumes coming across my desk these days. Most, especially the younger crowd, flaunt their open source projects, hacks, and adventures with ‘technology X’ as a badge of achievement rather than certifications. In our shop and hiring process, that works out well. I doubt it’s the same everywhere.

Looking past certifications in ‘technology X’ to long-term development value-added skills adds more bang to the resume, and the individual, than any finite-lived piece of paper.

DeployTo – a simple PowerShell web deployment script

We’re constantly working to standardize how builds get pushed out to our development, UAT, and production servers. The typical ‘order of operations’ includes:

- compile the build

- backup the existing deployment

- copy the new deployment

- celebrate

Pretty simple, but with a few moving parts (git push, TeamCity pulls in, compiles, runs deployment procedures, IIS (hopefully) doesn’t explode).

One step to standardize this has been to add these steps into our psake scripts, but that got tiring (and dangerous when we found a flaw). When in doubt, refactor!

First, get the codez!

DeployTo.ps1 and an example settings.xml file.

Creating a simple deployment tool – DeployTo

The PowerShell file, DeployTo.ps1, should be located in your project, your PATH, or wherever your CI server can find it–I tend to include it in a folder we have that synchronizes to ALL of our build servers automatically via Live Mesh. You could include it with your project to ensure dependencies are always met (for public projects).

DeployTo has one expectation, that a settings.xml file (or file passed in the Settings argument) will contain a breakdown of your deployment paths.

Example:

<site>

<name>development</name>

<path>\\server\webs\path</path>

</site>

With names and paths in hand, DeployTo sets about to match the passed in deployment location to what exists in the file. If one is found, it proceeds with the backup and deployment process.

Calling DeployTo is as simple as:

deployto development

Now, looping through our settings.xml file looking for ‘deployment’:

foreach ($site in $xml.settings.site) {

if ($site.name.ToLower() -eq $deploy.ToLower()) {

writeMessage ("Found deployment plan for {0} -> {1}." -f $site.name, $site.path)

if ($SkipBackup -eq $false) {

backup($site)

}

deploy($site)

$success = $true

break;

}

}
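For comparison, the same lookup can be written as a pipeline filter. This is only a sketch: the XML is hypothetical sample content, and the settings root element is inferred from the $xml.settings.site expression in the loop above.

```powershell
# Hypothetical settings content; the real file may carry more than this.
[xml]$xml = @'
<settings>
  <site>
    <name>development</name>
    <path>\\server\webs\dev</path>
  </site>
  <site>
    <name>production</name>
    <path>\\server\webs\prod</path>
  </site>
</settings>
'@

$deploy = 'Development'

# -eq compares strings case-insensitively by default, so ToLower() is optional.
$site = $xml.settings.site |
    Where-Object { $_.name -eq $deploy } |
    Select-Object -First 1

if ($site) {
    "Found deployment plan for {0} -> {1}." -f $site.name, $site.path
} else {
    "No deployment plan named '$deploy'."
}
```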

The output also lets us know what’s happening (and is helpful for diagnosing issues in your CI’s build logs).

Deploying to DEVELOPMENT
Reading settings file at settings.xml.
Testing release path at .\release.
Found deployment plan for development -> \\server\site.
Making backup of 255 file(s) at \\server\site to \\server\site-2012-02-10-105321.
Backup succeeded.
Removing existing files at \\server\site.
Copying new release to \\server\site.
Deployment succeeded.
SUCCESS!

Backing up – A safety net when things go awry.

Your builds NEVER go bad, right? Deployments work 100% of the time? Right? Sure. 😉 No matter how many staging sites you test on, things can go bad on a deployment. That’s why we have BACKUPS. I could get fancy and .7z/.gzip up the files and such, but a simple directory copy serves exactly what I need.

The backup function itself is quite simple–take a directory of files and copy it into a new directory named with the original directory name + the current date/time.

function backup($site) {

try {

$currentDate = (Get-Date).ToString("yyyy-MM-dd-HHmmss");

$backupPath = $site.path + "-" + $currentDate;

$originalCount = (gci -recurse $site.path).count

writeMessage ("Making backup of {0} file(s) at {1} to {2}." -f $originalCount, $site.path, $backupPath)

# do the actual file copy, but ignore the thumbs.db file. It's such a horrid little file.

cp -recurse -exclude thumbs.db $site.path $backupPath

$backupCount = (gci -recurse $backupPath).count

if ($originalCount -ne $backupCount) {

writeError ("Backup failed; attempted to copy {0} file(s) and only copied {1} file(s)." -f $originalCount, $backupCount)

}

else {

writeSuccess ("Backup succeeded.")

}

}

catch

{

writeError ("Could not complete backup. EXCEPTION: {0}" -f $_)

}

}
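The count comparison only works if both listings count the same things. If thumbs.db (or anything else excluded from the copy) ever shows up in the source listing, the two numbers may disagree even though the copy was fine. A hedged sketch of a counting helper that skips it; Get-FileCount is a made-up name and is not part of DeployTo.ps1.

```powershell
# Hypothetical helper: count only files, and skip thumbs.db so the number
# lines up with what a copy that excludes thumbs.db is expected to produce.
function Get-FileCount([string]$path) {
    $files = Get-ChildItem -Recurse $path |
        Where-Object { -not $_.PSIsContainer -and $_.Name -ne 'thumbs.db' }
    return @($files).Count
}

# Build a throwaway folder to try it on.
$root = Join-Path ([System.IO.Path]::GetTempPath()) ('site-' + [guid]::NewGuid())
New-Item -ItemType Directory -Path $root | Out-Null
New-Item -ItemType Directory -Path (Join-Path $root 'scripts') | Out-Null
Set-Content -Path (Join-Path $root 'index.html') -Value 'hello'
Set-Content -Path (Join-Path $root 'thumbs.db') -Value 'junk'
Set-Content -Path (Join-Path $root 'scripts/app.js') -Value '// js'

$count = Get-FileCount $root    # index.html and scripts/app.js
Remove-Item -Recurse -Force $root
$count
```

Directories are left out of the count as well, which keeps the message about "file(s)" honest.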

Deploying — copying files, plain and simple

Someday, I may have the need to be fancy. Since IIS automatically boots itself when a new web.config is added, I don’t have any ‘logic’ to my deployment scripts. We also, for now, keep our database deployments separate from our web view deployments. For now, deploying is copying files; however, who wants to do that by hand? Not me.

function deploy($site) {

try {

writeMessage ("Removing existing files at {0}." -f $site.path)

# force, because thumbs.db is annoying

rm -force -recurse $site.path

writeMessage ("Copying new release to {0}." -f $site.path)

cp -recurse -exclude thumbs.db $releaseDirectory $site.path

$originalCount = (gci -recurse $releaseDirectory).count

$siteCount = (gci -recurse $site.path).count

if ($originalCount -ne $siteCount)

{

writeError ( "Deployment failed; attempted to copy {0} file(s) and only copied {1} file(s)." -f $originalCount, $siteCount)

}

else {

writeSuccess ("Deployment succeeded.")

}

}

catch {

writeError ("Could not deploy. EXCEPTION: {0}" -f $_)

}

}

That’s it.

One thing you’ll notice in both scripts is I am doing a bit of monitoring and testing.

- Do paths exist before we begin the process?

- Do the backed up/copied/original file counts match?

- Did anything else go awry so we can throw a general error?

It’s a work in progress, but has met our needs quite well over the past several months with psake and TeamCity.

NuGet Package Restore, Multiple Repositories, and CI Servers

I really like NuGet’s new Package Restore feature (and so do our git repositories).

We have several common libraries that we’ve moved into a private, local NuGet repository on our network. It’s really helped deal with the dependency and version nightmares between projects and developers.

I checked my first project using full package restore and our new local repositories into our CI server, TeamCity, the other day and noticed that the Package Restore feature couldn’t find the packages stored in our local repository.

At first, I thought there was a snag (permissions, network, general unhappiness) with our NuGet share, but all seemed well. To my surprise, repository locations are not stored in that swanky .nuget directory, but as part of the current user profile. %appdata%\NuGet\NuGet.Config to be precise.

Well, that’s nice on my workstation, but NETWORK SERVICE doesn’t have a profile and the All Users AppData directory didn’t seem to take effect.

The solution:

For TeamCity, at least, the solution was to set the TeamCity build agent services to run as a specific user (I chose a domain user in our network, you could use a local user as well). Once you have a profile, go into %drive%:\users\{your service name}\appdata\roaming\nuget and modify the nuget.config file.

Here’s an example of the file:

<?xml version="1.0" encoding="utf-8"?>

<configuration>

<packageSources>

<add key="NuGet official package source" value="https://msft.long.url.here" />

<add key="Student Achievement [local]" value="\\server.domain.com\shared$\nuget" />

</packageSources>

<activePackageSource>

<add key="NuGet official package source" value="https://msft.long.url.here" />

</activePackageSource>

</configuration>

Package Restore will attempt to find the packages on the ‘activePackageSource’ first then proceed through the list.

Remember, if you have multiple build agent servers, this must be done on each server.
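When repeating this across several build agents, a quick sanity check is to list the sources a given NuGet.Config actually declares. A small sketch, assuming the file looks like the example above; the XML is inlined here, and on a real agent you would load the file from the service account's profile instead.

```powershell
# Inline copy of the example config; on an agent, something like
# [xml](Get-Content <path to NuGet.Config>) would take its place.
[xml]$config = @'
<configuration>
  <packageSources>
    <add key="NuGet official package source" value="https://msft.long.url.here" />
    <add key="Student Achievement [local]" value="\\server.domain.com\shared$\nuget" />
  </packageSources>
</configuration>
'@

# Pull the key/value attributes off every <add> under <packageSources>.
$sources = $config.SelectNodes('/configuration/packageSources/add') | ForEach-Object {
    New-Object PSObject -Property @{
        Name     = $_.GetAttribute('key')
        Location = $_.GetAttribute('value')
    }
}

$sources | Format-Table Name, Location -AutoSize
```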

Wish List: The option to include non-standard repositories as part of the .nuget folder. 🙂

Workaround: Oracle, NHibernate, and CreateSQLQuery Not Working

It’s difficult to sum this post up with a title. I started the morning adding (what I thought to be) a trivial feature to one of our shared repository libraries.

By the time I saw light at the end of the rabbit hole, I wasn’t sure what happened. This is the tale of my journey. All of the code is guaranteed to work on my machine… usually. 😉

I’ve done this before–how hard could it be?

The full source code is available via a gist.

The initial need

A simple need really: take a complex query and trim it down to a model using NHibernate’s Session.CreateSQLQuery and Transformers.AliasToBean<T>.

The problems

So far, the only data provider I’ve had these problems with is Oracle’s ODP: Oracle.DataAccess. I’m not sure if the built-in System.Data.OracleClient has the same problems.

Problem #1 – Why is EVERYTHING IN CAPS?

The first oddness I ran into seemed to be caused by the IPropertyAccessor returning the properties in ALL CAPS. When it tried to match the aliases in the array, [FIRSTNAME] != [FirstName]. Well, that’s annoying.

Workaround: Add an additional PropertyInfo[] and fetch the properties myself.

This method ignores the aliases parameter in TransformTuple and relies on a call in the constructor to populate the Transformer’s properties.

public OracleSQLAliasToBeanTransformer(Type resultClass)

{

// [snip!]

// this is also a PERSONAL preference to only return fields that have a valid setter.

_fields = this._resultClass.GetProperties(Flags)

.Where(x => x.GetSetMethod() != null).ToArray();

}

Inside TransformTuple, I then call on _fields rather than the aliases parameter.

var fieldNames = _fields.Select(x => x.Name).ToList();

// [big snip!]

_setters = new ISetter[fieldNames.Count];

for (var i = 0; i < fieldNames.Count; i++)

{

var fieldName = fieldNames[i];

_setters[i] = _propertyAccessor.GetSetter(_resultClass, fieldName);

}

Problem solved. Everything is proper case.

Bold assumption: I’m guessing this is coming back in as upper case because Oracle, by default, stores and retrieves everything as upper case unless it’s surrounded by quotes (which has its own disadvantages).

Problem #2 – Why are my ints coming in as decimals and strings as char[]?

This one I’m taking a wild guess. I found a similar issue for Hibernate (Java daddy of NHibernate), but didn’t see a matching NHibernate issue. It seems that the types coming in are correct, but the tuple data types are wrong.

For example, if an object has an integer 0 value, it returns as 0M and implicitly converts to decimal.

Workaround: Use System.Convert.ChangeType(obj, T)

If I used this on every piece of code, I’d feel more guilty than I do; however, on edge cases where the standard AliasToBeanTransformer won’t work, I chalk it up to part of doing business with Oracle.

Inside the TransformTuple method, I iterate over the fields and recast each tuple member accordingly. The only caveat is that I’m separating out enums and specifically casting them as int32. YMMV.

var fieldNames = _fields.Select(x => x.Name).ToList();

for (var i = 0; i < fieldNames.Count; i++)

{

var fieldType = _fields[i].PropertyType;

if (fieldType.IsEnum)

{

// It can't seem to handle enums, so convert them

// to Int (so the enum will work)

tuple[i] = Convert.ChangeType(tuple[i], TypeCode.Int32);

}

else

{

// set it to the actual field type on the property we're

// filling.

tuple[i] = Convert.ChangeType(tuple[i], fieldType);

}

}

At this point, everything is recast to match the Type of the incoming property. When all is said and done, adding a bit of exception handling around this is recommended (though, I’m not sure when a non-expected error might pop here).

Problem solved. Our _setters[i].Set() can now populate our transformation and return it to the client.
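Since Convert.ChangeType is plain .NET, the conversions are easy to poke at from a PowerShell prompt before wiring them into the transformer. This is just a sketch: the sample values are invented, and System.DayOfWeek stands in for whatever enum your model actually uses.

```powershell
# Values shaped like the ones described above: numbers arrive as decimals.
$tuple = @([decimal]0, [decimal]42, [decimal]3)

# Decimal -> Int32 for an ordinary int property.
$asInt = [Convert]::ChangeType($tuple[1], [int])

# Enum-typed property: convert to Int32 first, then hand it to the enum.
$asInt32 = [Convert]::ChangeType($tuple[2], [TypeCode]::Int32)
$asEnum  = [Enum]::ToObject([System.DayOfWeek], $asInt32)

$asInt.GetType().Name    # Int32
$asEnum                  # Wednesday
```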

Summary

Lessons learned? Like Mr. Clarkson usually discovers, when it sounds easy, it means you’ll usually end up on fire. Keep fire extinguishers handy at all times.

Is there another way to do this? Probably. I could probably create a throwaway DTO with all capital letters then use AutoMapper or such to map it to the properly-cased objects. That, honestly, seems more mindnumbing than this (though perhaps less voodoo).

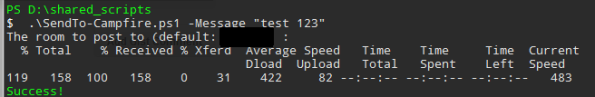

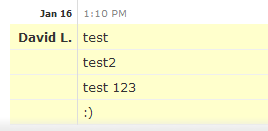

Posting to Campfire using PowerShell (and curl)

I have a few tasks that kick off nightly that I wanted to post status updates into our team’s Campfire room. Thankfully, 37signals Campfire has an amazing API. With that knowledge, time to create a quick PowerShell function!

NOTE: I use curl for this. The Linux/Unix folks likely know curl, however, I’m sure the Windows folks have funny looks on their faces. You can grab the latest curl here for Windows (the Win32 or Win64 generic versions are fine).

The full code for this function is available via gist.

I pass two parameters: the room number (though this could be tweaked to be optional if you only have one room) and the message to post.

param (

[string]$RoomNumber = (Read-Host "The room to post to (default: 123456) "),

[string]$Message = (Read-Host "The message to send ")

)

$defaultRoom = "123456"

if ($RoomNumber -eq "") {

$RoomNumber = $defaultRoom

}

There are two baked-in variables, the authentication token for your posting user (we created a ‘robot’ account that we use) and the YOURDOMAIN prefix for Campfire.

$authToken = "YOUR AUTH TOKEN"

$postUrl = "https://YOURDOMAIN.campfirenow.com/room/{0}/speak.json" -f $RoomNumber

The rest is simply using curl to HTTP POST a bit of JSON back up to the web service. If you’re not familiar with the JSON data format, give this a quick read. The best way I can sum up JSON is that it’s XML objects for the web with less wrist-cutting. 🙂

$data = "`"{'message':{'body':'$message'}}`""

$command = "curl -i --user {0}:X -H 'Content-Type: application/json' --data {1} {2}" -f $authToken, $data, $postUrl

$result = Invoke-Expression ($command)

if ($result[0].EndsWith("Created") -ne $true) {

Write-Host "Error!" -foregroundcolor red

$result

}

else {

Write-Host "Success!" -foregroundcolor green

}

Indeed, there be success!

It’s important to remember that PowerShell IS extremely powerful, but can become even more powerful coupled with other available tools–even the web itself!
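One thing to watch with the hand-built JSON string: a message containing an apostrophe would break the single-quoted body. If you are on PowerShell v3 or later, ConvertTo-Json can build the body instead. A minimal sketch, with a made-up message; quoting the result for curl is a separate matter and is not shown here.

```powershell
# A message with an apostrophe, which the hand-built string would choke on.
$Message = "Nightly build finished; it's green"

# Let PowerShell build and escape the JSON (requires v3+).
$body = @{ message = @{ body = $Message } } | ConvertTo-Json -Compress

# Round-trip it to confirm the text survives intact.
$roundTrip = ($body | ConvertFrom-Json).message.body

$body
```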

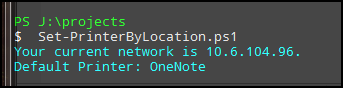

Changing Default Printers by Network Subnet

Windows 7 includes a pretty handy feature for mobile devices called location-aware printing. The feature itself is pretty cool and great if you’re moving between two distinct networks (home and work, for example). However, if you’re moving within the SAME network–and the SAME wireless SSID, it doesn’t register a difference. LAP doesn’t pay attention to your IP address, just the SSID you’re connected to.

In our organization, and most large corporations, wireless access points have the same name/credentials so that users can move seamlessly through the enterprise. How can we address location-based printing then?

One of my peers recently moved into a position where she travels between two buildings multiple times per day and frequently forgets to reset her default printer.

Here’s how I helped her out using a bit of PowerShell.

For the full code, check out this gist.

To begin, we need to specify our IP subnets and the printers associated to them. As this gets bigger (say 4-5 sites), it’d be easier to toss this into a separate file as a key-value pair and import it.

$homeNet = "10.1.4.*", "OfficePrinter"

$remoteNet = "10.1.6.*", "W382_HP_Printer"

Next, let’s grab all of the IP addresses currently active on our computer. Since we could have both wireless and wired plugged in, this returns an array.

$ipAddress = @()

$ipAddress = gwmi win32_NetworkAdapterConfiguration |

? { $_.IPEnabled -eq $true } |

% { $_.IPAddress } |

% { [IPAddress]$_ } |

? { $_.AddressFamily -eq 'internetwork' } |

% { $_.IPAddressToString }

Write-Host -fore cyan "Your current network is $ipAddress."

Our last step is to switch (using the awesome -wildcard flag since we’re using wildcards ‘*’ in our subnets) based on the returned IPs. The Set-DefaultPrinter function is a tweaked version of this code from The Scripting Guy.

function Set-DefaultPrinter([string]$printerPath) {

$printers = gwmi -class Win32_Printer -computer .

Write-Host -fore cyan "Default Printer: $printerPath"

$dp = $printers | ? { $_.deviceID -match $printerPath }

$dp.SetDefaultPrinter() | Out-Null

}

switch -wildcard ($ipAddress) {

$homeNet[0] { Set-DefaultPrinter $homeNet[1] }

$remoteNet[0] { Set-DefaultPrinter $remoteNet[1] }

default { Set-DefaultPrinter $homeNet[1] }

}

The full source code (a constantly updated version is available from gist).

$homeNet = "10.1.4.*", "OfficePrinter"

$remoteNet = "10.1.6.*", "W382_HP_Printer"

function Set-DefaultPrinter([string]$printerPath) {

$printers = gwmi -class Win32_Printer -computer .

Write-Host -fore cyan "Default Printer: $printerPath"

$dp = $printers | ? { $_.deviceID -match $printerPath }

$dp.SetDefaultPrinter() | Out-Null

}

$ipAddress = @()

$ipAddress = gwmi win32_NetworkAdapterConfiguration |

? { $_.IPEnabled -eq $true } |

% { $_.IPAddress } |

% { [IPAddress]$_ } |

? { $_.AddressFamily -eq 'internetwork' } |

% { $_.IPAddressToString }

Write-Host -fore cyan "Your current network is $ipAddress."

switch -wildcard ($ipAddress) {

$homeNet[0] { Set-DefaultPrinter $homeNet[1] }

$remoteNet[0] { Set-DefaultPrinter $remoteNet[1] }

default { Set-DefaultPrinter $homeNet[1] }

}
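As the list of sites grows, a table-driven lookup may be easier to maintain than adding switch clauses. Note that switch runs once per element of $ipAddress, so with several active adapters the default clause can fire for any address that matches nothing. The sketch below returns on the first match instead. Resolve-Printer and $printerMap are hypothetical names, and the table could just as well be loaded from a file.

```powershell
# Subnet-to-printer table; the values are taken from the example above.
$printerMap = @(
    @{ Subnet = '10.1.4.*'; Printer = 'OfficePrinter' },
    @{ Subnet = '10.1.6.*'; Printer = 'W382_HP_Printer' }
)

# Hypothetical helper: return the printer for the first address that
# matches a known subnet, or the default if nothing matches.
function Resolve-Printer([string[]]$ipAddresses, [object[]]$map, [string]$default) {
    foreach ($ip in $ipAddresses) {
        foreach ($entry in $map) {
            if ($ip -like $entry.Subnet) { return $entry.Printer }
        }
    }
    return $default
}

# Two adapters: one unknown network, one in the remote building.
$printer = Resolve-Printer -ipAddresses '192.168.1.5', '10.1.6.23' -map $printerMap -default 'OfficePrinter'
$printer
```

The result could then be handed to Set-DefaultPrinter in place of the switch block.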

Tip: Using Spark Conditionals to Toggle CSS and JavaScript

The conditional attribute is a fantastic shortcut to toggle CSS, input boxes, and other elements on a page–and is something I don’t see used in very many examples. One of my favorites is applying classes to an element based on an output model property, such as a permission boolean.

Here’s an example.

In a recent project, a dashboard screen had several charts that toggled on and off based on the user’s preference. Rather than rebuild the screen each time, each chart simply toggled an ‘enabled’ class based on a Model.{Property}.

<div class="charts">

<div id="enteredbycount" class="loading enabled?{Model.ShowEnteredBy}"

style="inlineChart">

</div>

<div id="schoolcount" class="loading enabled?{Model.ShowSchoolCount}"

style="inlineChart">

</div>

</div>

The Spark Conditional only renders the text preceding the conditional ?{} if the condition is true. In this example, if our Model.ShowSchoolCount returns false, enabled never renders and our chart (due to some styling) remains hidden and never posts back to the server to get the chart data (saving an unnecessary AJAX call).

By toggling a class, you can trigger a certain set of styles, events using JavaScript, or most anything else you can dream up.